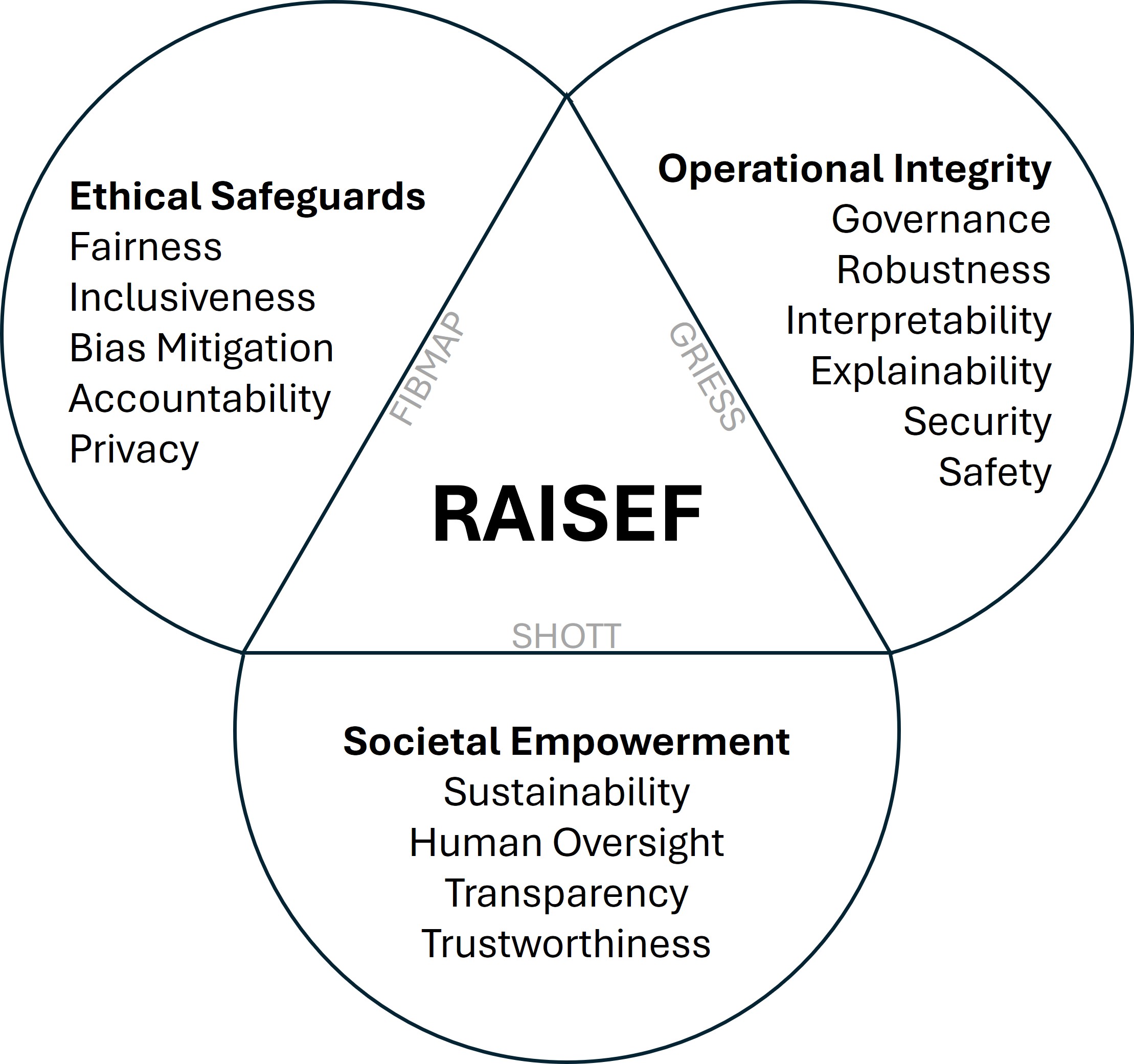

Ethical Safeguards

Principles that keep AI aligned with human values. This pillar focuses on protecting people and their data, ensuring decisions are explainable and contestable, and making responsibility clear.

Fairness | Inclusiveness | Bias Mitigation | Accountability | Privacy