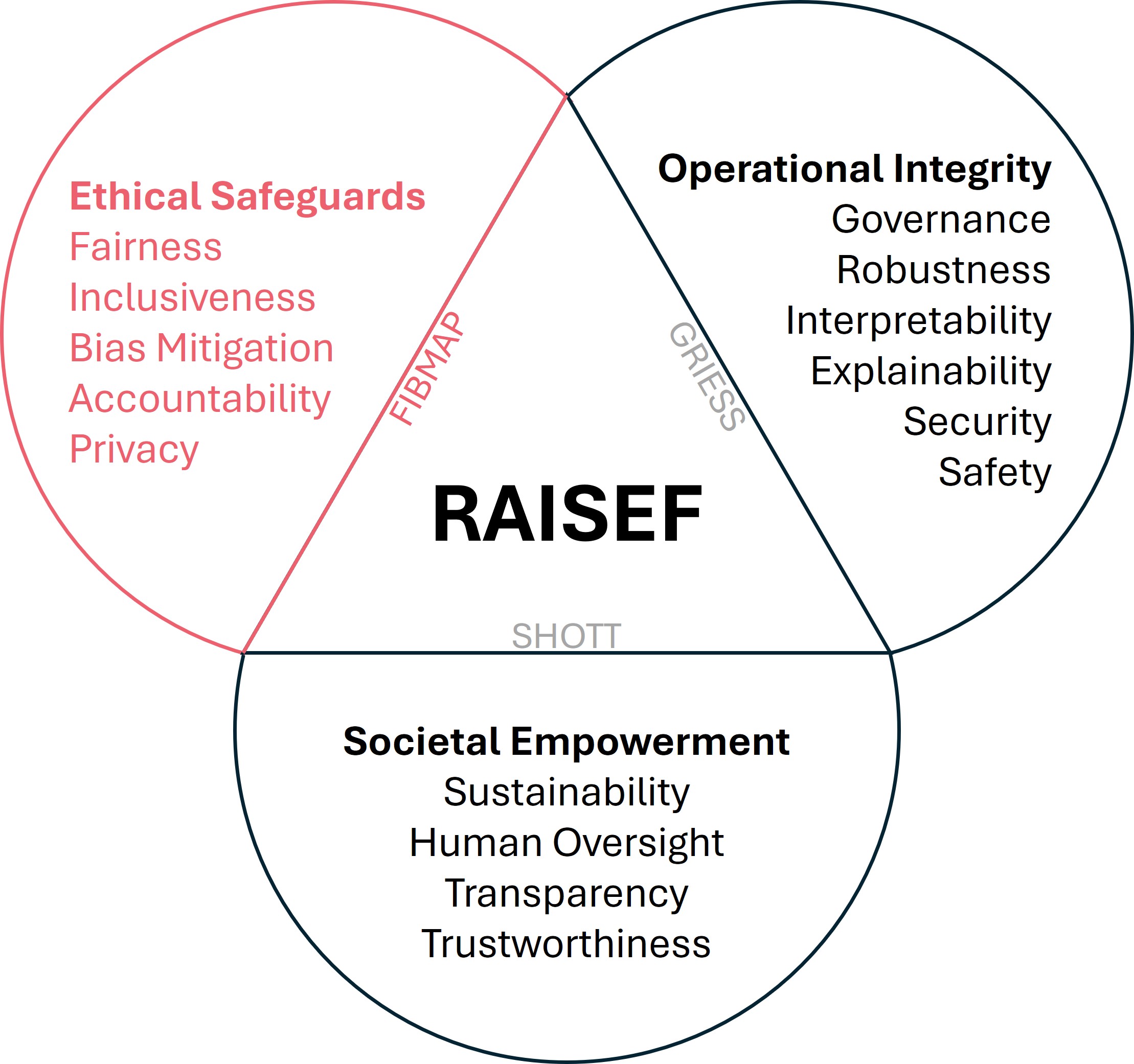

**Inter-Pillar Relationships**

**Pillar: Ethical Safeguards**

| Driver pair | Relationship | Explanation | Example |
| --- | --- | --- | --- |
| Accountability vs. Bias Mitigation | ■ Reinforcing | Accountability involves ensuring AI systems do not cause harm through biases, closely aligning with bias mitigation efforts to ensure fairness (Ferrara, 2024) | Regular bias audits reinforce accountability in AI hiring systems, reducing discriminatory outcomes and enhancing fairness (Cheong, 2024) |
| Accountability vs. Fairness | ■ Reinforcing | Accountability requires fairness to ensure equitable AI outcomes, linking the two drivers for ethical AI system design (Ferrara, 2024) | In financial AI systems, fairness audits enhance accountability by preventing discriminatory lending practices and ensuring equitable treatment (Saura & Debasa, 2022) |
| Accountability vs. Inclusiveness | ■ Reinforcing | Accountability ensures inclusiveness by requiring equitable representation and consideration of diverse perspectives in AI system governance (Leslie, 2019) | A company implements inclusive decision-making practices, reinforcing accountability by reducing disparities in AI outcomes (Bullock et al., 2024) |
| Accountability vs. Privacy | ■ Tensioned | Accountability can conflict with privacy, as complete transparency might infringe on data protection norms (Solove, 2025) | Implementing exhaustive audit trails ensures accountability but could compromise individuals’ privacy in sensitive sectors (Solove, 2025) |
| Bias Mitigation vs. Fairness | ■ Tensioned | While both aim to reduce injustices, techniques for fairness (e.g., demographic parity) can sometimes contradict bias mitigation goals (Ferrara, 2024) | Ensuring demographic parity in hiring algorithms might lead to the over-representation of certain groups, raising concerns about individual fairness (Dubber et al., 2020) |
| Bias Mitigation vs. Inclusiveness | ■ Reinforcing | Bias mitigation supports inclusiveness by actively addressing representation gaps, enhancing fairness across AI applications (Ferrara, 2024) | Implementing bias audits ensures diverse datasets in educational AI models, promoting inclusiveness (Ferrara, 2024) |
| Bias Mitigation vs. Privacy | ■ Tensioned | Bias mitigation can conflict with privacy when data diversity requires sensitive personal information (Ferrara, 2024) | Healthcare AI often struggles to balance privacy laws with the need for diverse training data (Ferrara, 2024) |
| Fairness vs. Inclusiveness | ■ Reinforcing | Inclusiveness enhances fairness by broadening AI’s scope to reflect diverse societal elements equitably (Shams et al., 2023) | Inclusive AI hiring prevents gender disparity by reflecting diversity through fair data representation (Shams et al., 2023) |
| Fairness vs. Privacy | ■ Tensioned | Tensions arise as fairness needs ample data, potentially conflicting with privacy expectations (Cheong, 2024) | Fair lending AI seeks demographic data for fairness, challenging privacy rights (Cheong, 2024) |
| Inclusiveness vs. Privacy | ■ Tensioned | Privacy-preserving techniques can limit data diversity, compromising inclusiveness (d’Aliberti et al., 2024) | Differential privacy in healthcare AI might obscure patterns relevant to minority groups (d’Aliberti et al., 2024) |

**Cross-Pillar Relationships**

**Pillar: Ethical Safeguards vs. Operational Integrity**

| Driver pair | Relationship | Explanation | Example |
| --- | --- | --- | --- |
| Accountability vs. Explainability | ■ Reinforcing | Accountability promotes explainability by requiring justifications for AI decisions, fostering transparency and informed oversight (Busuioc, 2021) | Implementing clear explanations in credit scoring ensures accountability and compliance with regulations, enhancing stakeholder trust (Cheong, 2024) |
| Accountability vs. Governance | ■ Reinforcing | Governance frameworks incorporate accountability to ensure decisions in AI systems are monitored, traceable, and responsibly managed (Bullock et al., 2024) | An AI firm employs governance logs to ensure accountable decision-making, facilitating regulatory compliance and stakeholder trust (Dubber et al., 2020) |
| Accountability vs. Interpretability | ■ Reinforcing | Accountability and interpretability enhance transparency and trust, essential for effective AI system governance (Dubber et al., 2020) | In finance, regulators use interpretable AI to ensure banks’ accountability by tracking decisions (Ananny & Crawford, 2018) |
| Accountability vs. Robustness | ■ Tensioned | Accountability’s demand for traceability may compromise robustness by exposing sensitive vulnerabilities (Leslie, 2019) | In autonomous vehicles, robust privacy measures can conflict with traceability required for accountability (Busuioc, 2021) |
| Accountability vs. Safety | ■ Reinforcing | Accountability ensures AI safety by demanding human oversight and system verification, enhancing procedural safeguards (Leslie, 2019) | In autonomous vehicles, accountability through rigorous standards enhances safety measures ensuring fail-safe operations (Leslie, 2019) |
| Accountability vs. Security | ■ Reinforcing | Accountability enhances security by ensuring responsible data management and risk identification (Voeneky et al., 2022) | Regular audits on AI systems’ security protocols ensure accountability and safety for data governance (Voeneky et al., 2022) |
| Bias Mitigation vs. Explainability | ■ Tensioned | Bias mitigation can obscure model operations, conflicting with the transparency needed for explainability (Rudin, 2019) | In high-stakes justice applications, improving model explainability can compromise bias mitigation efforts (Busuioc, 2021) |
| Bias Mitigation vs. Governance | ■ Reinforcing | Governance frameworks regularly incorporate bias mitigation strategies, reinforcing ethical AI implementation (Ferrara, 2024) | AI governance policies in finance often include bias audits, ensuring ethical compliance (Ferrara, 2024) |
| Bias Mitigation vs. Interpretability | ■ Reinforcing | Interpretability aids bias detection, supporting equitable AI systems by elucidating model decisions (Ferrara, 2024) | Interpretable healthcare models reveal biases in diagnostic outputs, promoting fair treatment (Ferrara, 2024) |
| Bias Mitigation vs. Robustness | ■ Reinforcing | Bias mitigation enhances robustness by incorporating diverse data, reducing systematic vulnerabilities in AI models (Ferrara, 2024) | Inclusive datasets in AI model training improve both bias mitigation and system robustness (Ferrara, 2024) |
| Bias Mitigation vs. Safety | ■ Reinforcing | Bias mitigation increases safety by addressing discrimination risks, central to safe AI deployment (Ferrara, 2024) | Ensuring fair training data mitigates bias-related risks in AI models, enhancing safety in autonomous vehicles (Ferrara, 2024) |
| Bias Mitigation vs. Security | ■ Reinforcing | Bias mitigation enhances security by reducing vulnerabilities that arise from discriminatory models (Habbal et al., 2024) | Including bias audits in AI-driven fraud detection systems strengthens security protocols (Habbal et al., 2024) |
| Explainability vs. Fairness | ■ Reinforcing | Explainability assists in ensuring fairness by elucidating biases, enabling equitable AI systems (Ferrara, 2024) | In credit scoring, explainable models help identify discrimination, promoting fairer lending practices (Ferrara, 2024) |
| Explainability vs. Inclusiveness | ■ Reinforcing | Explainability promotes inclusiveness by making AI decisions understandable, encouraging equitable stakeholder participation (Shams et al., 2023) | Explainable AI models help identify underrepresented groups’ needs, ensuring inclusive design in public policy (Shams et al., 2023) |
| Explainability vs. Privacy | ■ Tensioned | Explainability can jeopardize privacy by revealing sensitive algorithm details (Solove, 2025) | Disclosing algorithm logic in healthcare AI might infringe patient data privacy (Solove, 2025) |
| Fairness vs. Governance | ■ Reinforcing | Governance ensures fairness by establishing regulatory frameworks that guide AI systems towards unbiased practices (Cath, 2018) | The EU AI Act mandates fairness algorithms under governance to prevent discrimination in employment (Cath, 2018) |
| Fairness vs. Interpretability | ■ Reinforcing | Interpretability fosters fairness by making opaque AI systems comprehensible, allowing equitable scrutiny and accountability (Binns, 2018) | Interpretable algorithms in credit scoring identify biases, supporting fairness standards and promoting equitable lending (Bateni et al., 2022) |
| Fairness vs. Robustness | ■ Tensioned | Fairness might necessitate modifications that decrease robustness (Tocchetti et al., 2022) | Adjustments to AI models for fairness in loan approvals might reduce performance across datasets (Braiek & Khomh, 2024) |
| Fairness vs. Safety | ■ Tensioned | Fairness can conflict with safety since safety may require restrictive measures that impact equitable access (Leslie, 2019) | Self-driving algorithms balanced between passenger safety and fair pedestrian detection can lead to safety and fairness trade-offs (Cath, 2018) |
| Fairness vs. Security | ■ Tensioned | Fairness needs data transparency, often conflicting with strict security protocols prohibiting data access (Leslie et al., 2024) | Ensuring fair user data access can compromise data security boundaries, posing organizational security risks (Leslie et al., 2024) |
| Governance vs. Inclusiveness | ■ Reinforcing | Governance frameworks establish inclusive participation, ensuring representation of various groups in AI decision-making (Bullock et al., 2024) | Governance mandates diverse stakeholder involvement to ensure inclusiveness in AI policy development (Zowghi & Da Rimini, 2024) |
| Governance vs. Privacy | ■ Tensioned | Governance mandates can challenge privacy priorities, as regulations may require data access contrary to privacy safeguards (Mittelstadt, 2019) | Regulatory monitoring demands could infringe on personal privacy by requiring detailed data disclosures for compliance (Solow-Niederman, 2022) |
| Inclusiveness vs. Interpretability | ■ Reinforcing | Interpretability enriches inclusiveness by ensuring AI systems are understandable, fostering wide accessibility and equitable application (Shams et al., 2023) | Interpretable AI frameworks enable diverse communities’ meaningful engagement by clarifying system decisions, supporting inclusive practices (Cheong, 2024) |
| Inclusiveness vs. Robustness | ■ Tensioned | Inclusiveness may compromise robustness by prioritizing accessibility over resilience in challenging scenarios (Leslie, 2019) | AI built for varied groups might underperform in rigorous environments, highlighting tension (Leslie, 2019) |
| Inclusiveness vs. Safety | ■ Reinforcing | Inclusiveness motivates safety by ensuring diverse needs are considered, enhancing overall safety standards (Fosch-Villaronga & Poulsen, 2022) | Inclusive AI health tools accommodate diverse groups, improving overall safety and personalized healthcare (World Health Organization, 2021) |
| Inclusiveness vs. Security | ■ Tensioned | Inclusiveness in AI can expose it to vulnerabilities, challenging security measures (Fosch-Villaronga & Poulsen, 2022; Zowghi & Da Rimini, 2024) | Inclusive AI systems might prioritize accessibility but compromise security, as noted when addressing diverse infrastructures (Microsoft, 2022) |
| Interpretability vs. Privacy | ■ Tensioned | Privacy constraints often limit model transparency, complicating interpretability (Cheong, 2024) | In healthcare, strict privacy laws can impede clear interpretability, affecting decisions on patient data (Wachter & Mittelstadt, 2019) |
| Privacy vs. Robustness | ■ Tensioned | Achieving high privacy can sometimes challenge robustness by limiting data availability (Hamon et al., 2020) | Differential privacy techniques may decrease robustness, impacting AI model performance in varied conditions (Hamon et al., 2020) |
| Privacy vs. Safety | ■ Tensioned | Balancing privacy protection with ensuring safety can cause ethical dilemmas in AI systems (Bullock et al., 2024) | AI in autonomous vehicles must handle data privacy while addressing safety features (Bullock et al., 2024) |
| Privacy vs. Security | ■ Reinforcing | Both privacy and security strive to safeguard sensitive data, aligning their objectives (Hu et al., 2021) | Using encryption methods, AI systems ensure privacy while maintaining security, protecting data integrity (Hu et al., 2021) |

**Pillar: Ethical Safeguards vs. Societal Empowerment**

| Driver pair | Relationship | Explanation | Example |
| --- | --- | --- | --- |
| Accountability vs. Human Oversight | ■ Reinforcing | Accountability necessitates human oversight for ensuring responsible AI operations, requiring active human involvement and supervision (Leslie, 2019) | AI systems in healthcare employ human oversight for accountable decision-making, preventing potential adverse outcomes (Novelli et al., 2024) |
| Accountability vs. Sustainability | ■ Reinforcing | Accountability aligns with sustainability by ensuring responsible practices that support ecological integrity and social justice (van Wynsberghe, 2021) | Implementing accountable AI practices reduces carbon footprint while enhancing brand trust through sustainable operations (van Wynsberghe, 2021) |
| Accountability vs. Transparency | ■ Reinforcing | Transparency supports accountability by enabling oversight and verification of AI systems’ behavior (Dubber et al., 2020) | In algorithmic finance, transparency enables detailed audits for accountability, curbing unethical financial practices (Dubber et al., 2020) |
| Accountability vs. Trustworthiness | ■ Reinforcing | Accountability builds trustworthiness by enhancing transparency and integrity in AI operations (Schmidpeter & Altenburger, 2023) | AI systems with clear accountability domains are generally more trusted in healthcare settings (Busuioc, 2021) |
| Bias Mitigation vs. Human Oversight | ■ Reinforcing | Human oversight supports bias mitigation by ensuring continual auditing to detect and address biases (Ferrara, 2024) | In hiring AI, human oversight helps identify biases in training data, enhancing fairness (Ferrara, 2024) |
| Bias Mitigation vs. Sustainability | ■ Reinforcing | Bias mitigation supports sustainability by fostering fair access to AI benefits, reducing societal imbalances (Rohde et al., 2023) | Ensuring equitable data distribution in AI reduces systemic biases, contributing to sustainable growth (Rohde et al., 2023) |
| Bias Mitigation vs. Transparency | ■ Reinforcing | Bias mitigation relies on transparency to ensure fair AI systems by revealing discriminatory patterns (Ferrara, 2024) | Transparent algorithms in recruitment help identify bias in decision processes, ensuring fair practices (Ferrara, 2024) |
| Bias Mitigation vs. Trustworthiness | ■ Reinforcing | Bias mitigation fosters trustworthiness by addressing discrimination, thereby improving user confidence in AI systems (Ferrara, 2024) | In lending AI, bias audits enhance algorithm reliability, fostering trust among users and stakeholders (Ferrara, 2024) |
| Fairness vs. Human Oversight | ■ Reinforcing | Human oversight supports fairness by ensuring AI decisions reflect equitable practices grounded in human judgment (Voeneky et al., 2022) | For recruitment AI, human oversight calibrates fairness, reviewing bias mitigation strategies before final implementation (Bateni et al., 2022) |
| Fairness vs. Sustainability | ■ Reinforcing | Fairness supports sustainability by advocating equitable resource distribution, essential for sustainable AI solutions (Schmidpeter & Altenburger, 2023) | AI systems ensuring fair access to renewable energy results underscore this synergy (van Wynsberghe, 2021) |
| Fairness vs. Transparency | ■ Reinforcing | Transparency in AI increases fairness by allowing for the identification and correction of biases (Ferrara, 2024) | Transparent hiring algorithms enable fairness by revealing discriminatory patterns in recruitment practices (Lu et al., 2024) |
| Fairness vs. Trustworthiness | ■ Reinforcing | Fairness enhances trustworthiness by promoting equal treatment and diminishing bias, thus fostering confidence in AI systems (Cheong, 2024) | Mortgage AI with fair credit evaluations strengthens trustworthiness, ensuring non-discriminatory decisions for applicants (Dubber et al., 2020) |
| Human Oversight vs. Inclusiveness | ■ Reinforcing | Human oversight promotes inclusiveness by ensuring diverse perspectives shape AI ethics and implementation (Dubber et al., 2020) | Human oversight in AI enhances inclusiveness by involving diverse stakeholder consultations during system development (Zowghi & Da Rimini, 2024) |
| Human Oversight vs. Privacy | ■ Tensioned | Human oversight might collide with privacy, requiring access to sensitive data for supervision (Solove, 2025) | AI deployment often requires human oversight to evaluate algorithms that handle sensitive data, which conflicts with privacy norms (Dubber et al., 2020) |
| Inclusiveness vs. Sustainability | ■ Reinforcing | Inclusive AI development inherently supports sustainable goals by considering diverse needs and reducing inequalities (van Wynsberghe, 2021) | AI initiatives promoting inclusivity often align with sustainability, as seen in projects that address accessibility in green technologies (van Wynsberghe, 2021) |
| Inclusiveness vs. Transparency | ■ Reinforcing | Both inclusiveness and transparency promote equitable access and understanding in AI, enhancing collaborative growth (Buijsman, 2024) | Diverse teams enhance transparency tools in AI systems, ensuring fair representation and increased public understanding (Buijsman, 2024) |
| Inclusiveness vs. Trustworthiness | ■ Tensioned | Trust-building measures, like rigorous security checks, can marginalize less-privileged stakeholders (Bullock et al., 2024) | Expensive trust audits in AI systems may exclude smaller organizations from participation (Dubber et al., 2020) |
| Privacy vs. Sustainability | ■ Tensioned | Privacy demands limit data availability, hindering AI’s potential to achieve sustainability goals (van Wynsberghe, 2021) | Strict privacy laws restrict data collection necessary for AI to optimize urban energy use (Bullock et al., 2024) |
| Privacy vs. Transparency | ■ Tensioned | High transparency can inadvertently compromise user privacy (Cheong, 2024) | Algorithm registries disclose data sources but risk exposing personal data (Buijsman, 2024) |
| Privacy vs. Trustworthiness | ■ Reinforcing | Privacy measures bolster trustworthiness by safeguarding data against misuse, fostering user confidence (Lu et al., 2024) | Adopting privacy-centric AI practices enhances trust by ensuring user data isn’t exploited deceptively (Lu et al., 2024) |